How can we combat the spread of COVID-19 misinformation?

by Jon Kuiperij – May 31, 2021

In Take 5, Sheridan’s thought leaders share their expert insight on a timely and topical issue. Learn from some of our innovative leaders and change agents as they reflect on questions that are top-of-mind for the Sheridan and broader community.

Misinformation about the cause, scope and treatment of COVID-19 has presented serious public health risks throughout the pandemic. Sheridan professors and researchers Dr. Nathaniel Barr (pictured above left) and Dr. Michael McNamara (pictured above right), in collaboration with partners MediaSmarts, BEworks and some of the world’s foremost misinformation researchers, are lead investigators on a research project that will design, test and disseminate creative interventions aimed at combating the online spread of misinformation. In this installment of Take 5, Nathaniel and Michael explain the causes and dangers of COVID-19 misinformation, strategies to suppress it and more.

1. Why is COVID-19 misinformation such a big issue?

There’s a huge amount of misinformation flying around online about the pandemic. Research, including some we’ve published, suggests holding beliefs that are tied to this misinformation is leading to a lack of compliance with what we need to do as a society to move forward — whether it be social distancing or choosing to get a vaccine, whether it’s inaction or direct opposition in the face of what’s needed. What we need is a collective societal response, and misinformation can be a barrier.

2. Who or what is to blame for all of this misinformation?

There are lots of causes. Probably the most obvious is the attention economy that operates in social media and online environments, which is oriented towards things that are enticing or intriguing and not necessarily towards truth. There can be a reputational currency that is earned through sharing certain types of content: sometimes it’s to be seen as a provocateur, sometimes it’s to be in the conservative camp or whatever the moral or social group is that you’re trying to impress. Those can be motivating factors.

In addition to people who are relatively unconcerned about the truth and are just looking for clicks or attention without having a strong horse in the race, it is important to remember that there are individuals or groups that are responsible for sharing, for various reasons, outright disinformation — content that is specifically intended to deceive.

Some of this is also an interesting consequence of the global reach of media and networks, meaning that content we see is now available around the world. This is a global problem, and not one that Canada is immune to.

3. Have there been any commonalities or trends you’ve seen in COVID-19 misinformation as the pandemic has progressed from discovery to prevention to vaccines?

We’ve seen people believe a wide range of things, from believing COVID-19 is just a little worse than a flu, to believing the pandemic is a ploy to crush small business and prop up the giants of our economy, to believing that 5G nanobots are being injected into our bloodstreams as part of Bill Gates’ master plan.

While the nature of these beliefs is worrisome, what is perhaps more alarming is how pervasive they are. A recent report from BEworks showed that even those who were relatively high in vaccination intent held some form of conspiracy belief. Significant proportions of the populace endorse such views and, given the established connection between these beliefs and both adherence to public health guidelines and vaccination intent, we are looking at a significant challenge.

4. What is the best way to combat the spread of COVID-19 misinformation?

Our project hopes to limit the impact of COVID-19 misinformation by tackling its more casual spreaders. We’re not looking to tackle the construction of this stuff, nor are we trying to debunk deeply held conspiratorial beliefs. Our aim is to stop people who aren’t heavily invested in these beliefs from further polluting the informational ecosystem. But the only way to truly know if an intervention will work is to test it scientifically. There have been many well-intended and seemingly intuitive solutions that haven’t necessarily worked.

Our research project differs from many other efforts in that we start with behavioural insights, which are rooted in findings from the scientific literature about why people think and act the way they do, and then leverage the creativity of Sheridan arts alumni to manifest those insights in a dynamic way. Our work here creatively extends research that was recently published in Nature and led by our collaborators Gordon Pennycook and David Rand, which showed that people aren’t often thinking about accuracy when sharing online and that simply prompting them to consider whether something is true makes them more discerning in what they share. We then present participants with these designs as interventions, have them view real and fake COVID-related headlines, and ask them to indicate the extent to which they’d be willing to share each headline online.

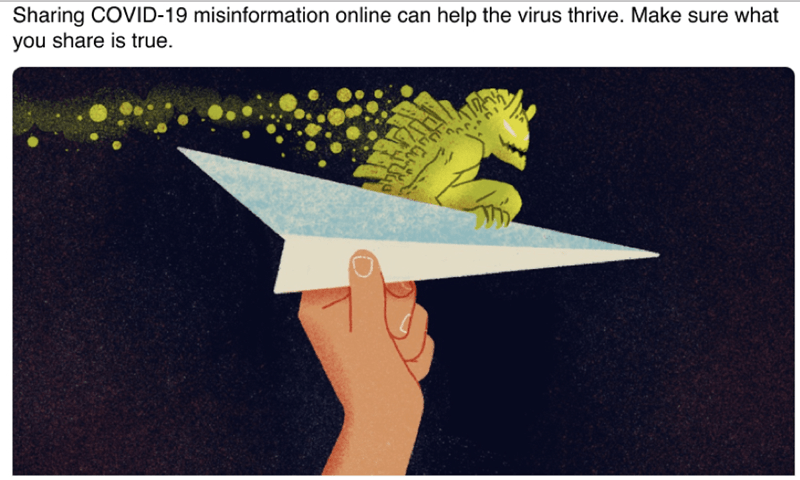

Above (top): Illustrator: Drew Shannon; Creative Direction: Erin McPhee. Above: Illustrator: Genevieve Ashley; Creative Direction: Erin McPhee

Based on our Phase 1 results, we believe that these illustrations and interventions could be effective. Some work and some don’t, and an important part of our subsequent research will be trying to isolate the variables or factors that made certain designs more effective. A lot of misinformation seems to come in the form of visuals: things like pictures, memes and cartoons that are visually arresting and compelling. Part of the story for us is that the corrective mechanisms should be at least as compelling as what they are fighting.

5. How should people decide who to believe or rely on for COVID-19 information?

This intersects with a broader societal trend: to what extent do we value experts?

A simple rule of thumb would be to look to some of the official bodies — in the United States, it’s the Centers for Disease Control and Prevention; here in Canada, it’s our public health officials and researchers — and people who have systematically and scientifically studied this, rather than relying on what your Uncle Rick says on Facebook. In the spirit of MediaSmarts’ recent campaign Check then Share, it’s wise to check your sources to see if they align with expert consensus before sharing.

That said, we’ve observed a generally declining level of public trust in public institutions over the past decade. In 2020 alone, an Edelman Trust Barometer report showed a 3% decline in Canadians’ level of trust in the core institutions of business, government, media and non-governmental organizations. This can make things much more difficult in the broader conspiracy realm, where many feel that if the leading public health officials say something, it is probably false.

Ultimately, any time people encounter some information related to COVID-19, they should stop to consider where that information came from and the incentives of the person or organization sharing it. Then, simply, think about whether it’s true.

Dr. Nathaniel Barr and Dr. Michael McNamara are both professors of Creativity and Creative Thinking in Sheridan’s Faculty of Humanities and Social Sciences, fusing the institution’s artistic and design capabilities with expertise in the science of human behaviour. In 2020, Nathaniel and Michael were awarded a Natural Sciences and Engineering Research Council (NSERC) College and Community Social Innovation Fund grant supporting efforts to drive social innovation with regional non-profits, as well as an NSERC COVID-19 Rapid Response grant aimed at combating online misinformation surrounding the pandemic.

Nathaniel has been recognized as a ‘Global expert in the debunking of misinformation’ on the University of Bristol’s post-truth portal and has actively published and collaborated on COVID-19 related research, including the correlates of hoax beliefs and the factors contributing to vaccine hesitancy.

Michael has considerable applied research expertise, including past roles as Dean, Applied Research at Seneca College and Director, Applied Research at Northern Alberta Institute of Technology. During his time at Sheridan, Michael was Project Director on the ‘Community Ideas Factory’, a three-year collaborative research project that leveraged Sheridan’s research capabilities and expertise in social science research and creative problem-solving to support social innovations in Halton’s philanthropic sector.

Interested in connecting with Dr. Nathaniel Barr, Dr. Michael McNamara or another Sheridan expert? Please email communications@sheridancollege.ca.

The interview has been edited for length and clarity.
